Covid-19

Background

I was on the International Society for Quantitative Ethnography (ISQE) COVID-19 Data Challenge organization committee. And to promote the data challenge, I created a working example for how Quantitative Ethnography can be used to analyze COVID-19 data and provide insights into how people are thinking about the global pandemic.

I used a dataset of U.S. CDC tweets from Feb, March, and part of April 2020 that I downloaded from narcisoyu’s github. I added some variables and then coded the tweets for 6 themes, used the nCoder webtool to validate the codes (thanks to Jais Brohinsky and Kamila Misiejuk), and used the Epistemic Network Analysis webtool to visualize how the CDC’s information to the public changed as the global pandemic continued.

## Prepping the Data

I used the following packages and removed sci notation (it was driving me crazy!).

library(tidyverse)

library(tidytext)

library(topicmodels)

options(scipen=999)This is a tibble (a quick look) at the dataset. All files can be found in my covid repository.

tweets## # A tibble: 234 x 37

## created_at Date Week hashtags media urls favorite_count id

## <chr> <chr> <chr> <chr> <chr> <chr> <dbl> <dbl>

## 1 Sat Feb 0… 1-Feb week… coronav… http… http… 723 1.22e18

## 2 Sat Feb 0… 1-Feb week… CDC 201… http… http… 424 1.22e18

## 3 Sun Feb 0… 2-Feb week… HCPs co… http… http… 284 1.22e18

## 4 Sun Feb 0… 2-Feb week… 2019nCo… http… http… 490 1.22e18

## 5 Sun Feb 0… 2-Feb week… 2019nCo… http… http… 690 1.22e18

## 6 Mon Feb 0… 3-Feb week… flu http… http… 205 1.22e18

## 7 Mon Feb 0… 3-Feb week… fluvacc… http… http… 439 1.22e18

## 8 Tue Feb 0… 4-Feb week… <NA> <NA> <NA> 2 1.22e18

## 9 Tue Feb 0… 4-Feb week… <NA> <NA> <NA> 1 1.22e18

## 10 Tue Feb 0… 4-Feb week… Coronav… <NA> <NA> 0 1.22e18

## # … with 224 more rows, and 29 more variables:

## # in_reply_to_screen_name <chr>, in_reply_to_status_id <dbl>,

## # in_reply_to_user_id <dbl>, lang <chr>, place <lgl>,

## # possibly_sensitive <lgl>, retweet_count <dbl>, reweet_id <dbl>,

## # retweet_screen_name <chr>, source <chr>, text <chr>, tweet_url <chr>,

## # user_created_at <chr>, user_screen_name <chr>,

## # user_default_profile_image <lgl>, user_description <chr>,

## # user_favourites_count <dbl>, user_followers_count <dbl>,

## # user_friends_count <dbl>, user_listed_count <dbl>,

## # user_location <chr>, user_name <chr>, user_screen_name_1 <chr>,

## # user_statuses_count <dbl>, user_time_zone <lgl>, user_urls <chr>,

## # user_verified <lgl>, month <chr>, year <dbl>I added a year and month column

tweets <- tweets %>%

mutate(month = substring(created_at, 5, 7)) %>%

mutate(year = substring(created_at, nchar(created_at)-3, nchar(created_at))) %>%

filter(year == "2020") %>%

filter(month == "Feb" | month == "Mar" | month == "Apr")## Coding the Data To explore the dataset beyond just reading the tweets, I tried word frequencies,

tweets.words <- tweets %>%

select(text) %>%

unnest_tokens(word, text) %>%

anti_join(stop_words) %>%

filter(word != "https") %>%

filter(word != "t.co") %>%

filter(word != "rt") %>%

filter(word != "cdc") %>%

filter(word != "covid19") %>%

filter(word != "covid") %>%

filter(word != "coronavirus") %>%

filter(word != "amp") %>%

count(word, sort = TRUE)

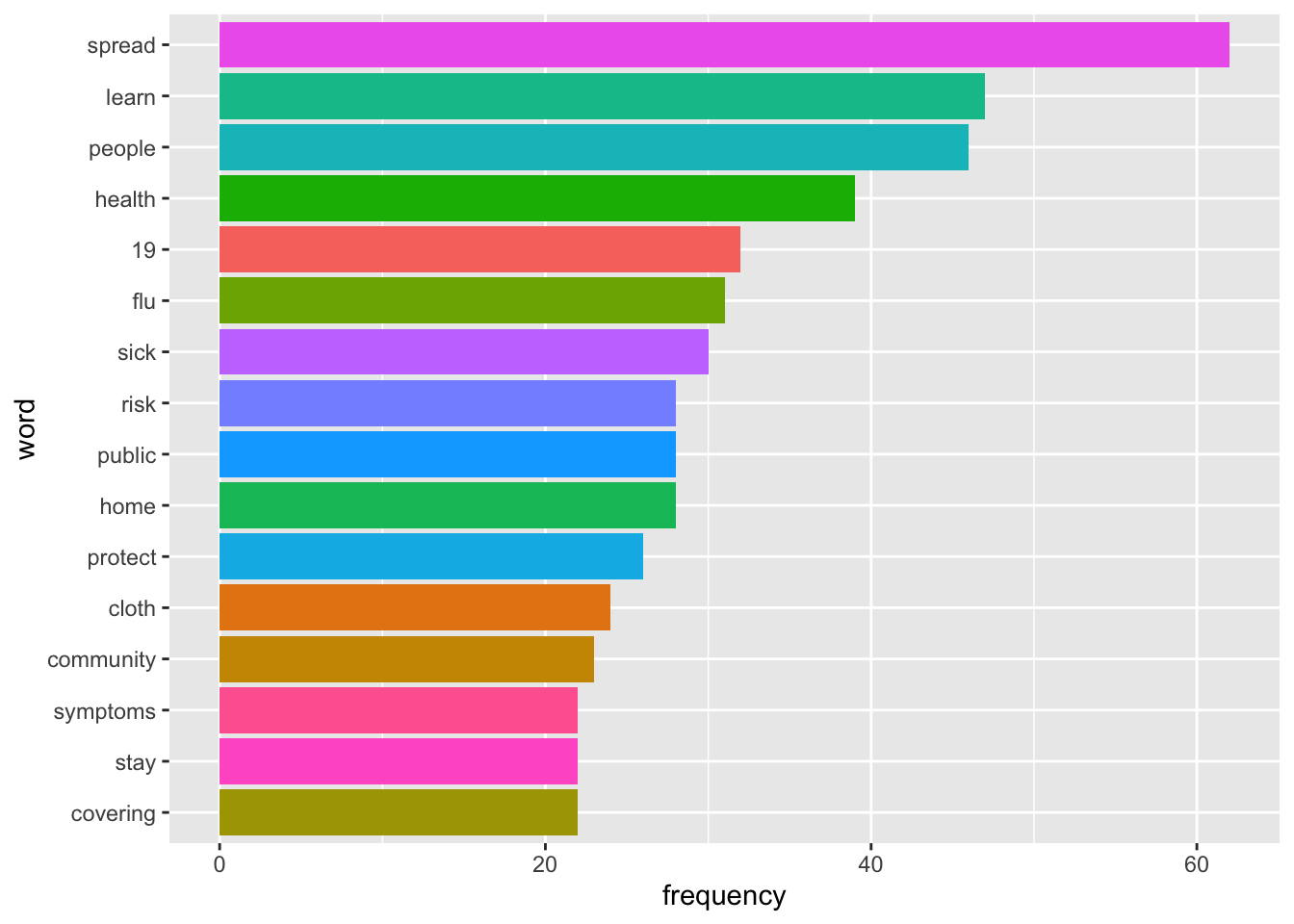

tweets.words %>%

top_n(15) %>%

ggplot(aes(fct_reorder(word, n), n, fill = word)) +

geom_col(show.legend = FALSE) +

labs(x = "word", y = "frequency") +

coord_flip() bigrams,

bigrams,

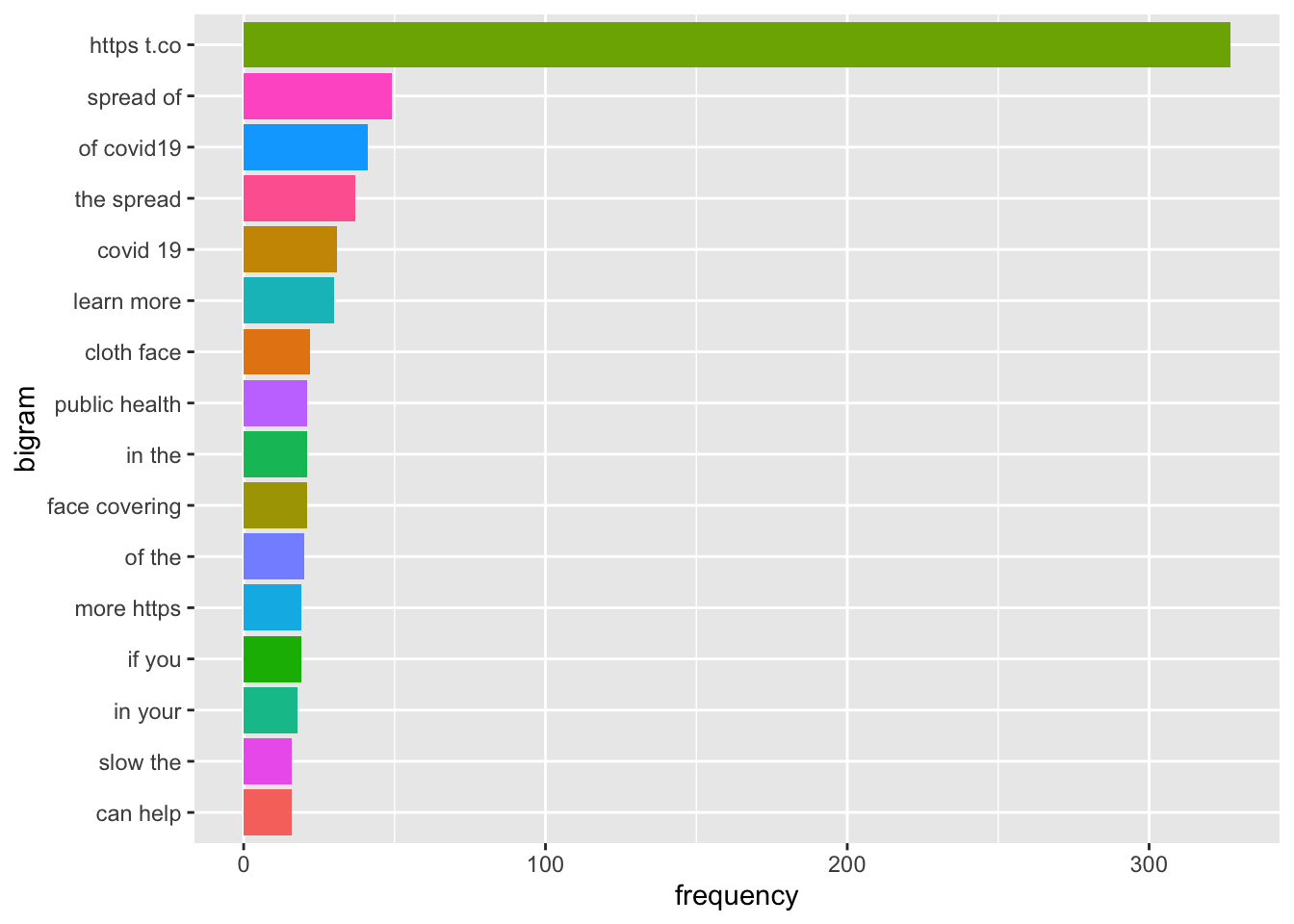

tweets.bi <- tweets %>%

select(text) %>%

unnest_tokens(bigram, text, token="ngrams", n = 2) %>%

count(bigram, sort = TRUE)

tweets.bi %>%

top_n(15) %>%

ggplot(aes(fct_reorder(bigram, n), n, fill = bigram)) +

geom_col(show.legend = FALSE) +

labs(x = "bigram", y = "frequency") +

coord_flip() and even topic modeling with LDA.

and even topic modeling with LDA.

##create document term matrix

tweets.dtm <- tweets %>%

select(text,id) %>%

unnest_tokens(word, text) %>%

anti_join(stop_words) %>%

count(word, id, sort = TRUE) %>%

ungroup() %>%

filter(word != "https") %>%

filter(word != "t.co") %>%

filter(word != "rt") %>%

filter(word != "cdc") %>%

filter(word != "covid19") %>%

filter(word != "covid") %>%

filter(word != "coronavirus") %>%

cast_dtm(id, word, n)

##take out tweets with no terms so LDA doesn't fail

rowTotals <- apply(tweets.dtm, 1, sum)

tweets.dtm <- tweets.dtm[rowTotals > 0 , ]

##run LDA

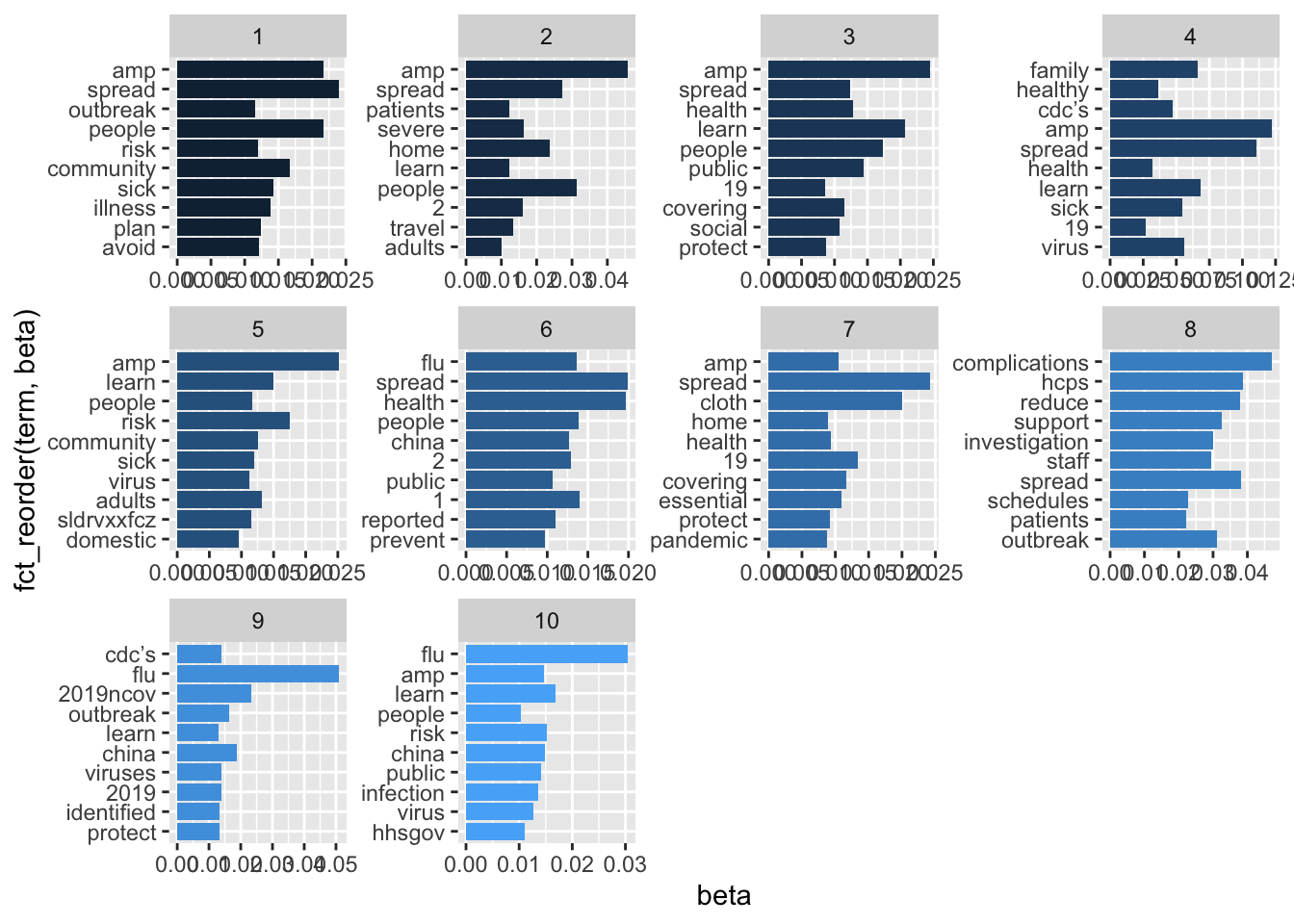

tweets.lda <- LDA(tweets.dtm, k = 10, control = list(seed = 1234))

tweets.lda.tidy <- tidy(tweets.lda)

##Show top words in each topic

tweets.lda.top <- tweets.lda.tidy %>%

group_by(topic) %>%

top_n(10, beta) %>%

arrange(topic, -beta) %>%

ungroup()

##Plot top words in each topic

tweets.lda.top %>%

mutate(term = reorder(term,topic)) %>%

ggplot(aes(fct_reorder(term, beta), beta, fill = topic)) +

geom_col(show.legend = FALSE) +

facet_wrap(~ topic, scales = "free") +

coord_flip() It wasn’t very helpful, but it did point out the most frequently used words and phrases. So, I went back to the data with this information to find common themes in the CDC tweets. ## Coding the Data

It wasn’t very helpful, but it did point out the most frequently used words and phrases. So, I went back to the data with this information to find common themes in the CDC tweets. ## Coding the Data

I developed five codes:

| Code | Definition | Sample Keywords |

|---|---|---|

| People | Referring to different populations of people affected by covid-19 | People, child, adult, elderly |

| Positive Action | Referring to an action that people can take to cope with or stay safe from covid-19 | Social distanc, #socialdist, reduce, disinfect |

| Infection and Spread | Referring to the spread, transmission of or exposure to covid-19. Also refers to explicitly referring to someone who is sick with the virus or may potentially become sick with covid-19. | Spread, close contact, widespread, transmission |

| Alarm | Using language that conveys complications, those who are at a more serious risk, death, or sense of alarm related to covid-19. | Risk, death, emergency, threat, expose, severe, complication, serious |

| Global | Referring to other countries or global effects of covid-19 beyond the U.S. | China, traveler, global, world, Korea, Japan, Congo |

And developed a full set of keywords and regular expressions based on the definitions of the codes that were validated in nCoder. Not all codes passed the statistical threshold, so this is still a work in progress.

Below is a function I wrote for coding the data. It takes the keyword file as an input and if the tweet contains the keyword, it will place a 1 in that column and if the tweet does not contain the keyword, it will place a 0 in that column.

##this is a function for coding

code = function(keywordtable, colnum, content) {

code <- unlist(paste(na.omit(keywordtable[,colnum]), collapse = "|"))

out = ifelse(grepl(code, content, ignore.case = TRUE, perl = TRUE),1,0)

return (out)

}

##code tweets

tweets$people <- code(keywords, 1, tweets$text)

tweets$spread <- code(keywords, 2, tweets$text)

tweets$alarm <- code(keywords, 3, tweets$text)

tweets$positive.action <- code(keywords, 4, tweets$text)

tweets$global <- code(keywords, 5, tweets$text)Visualizing the Data

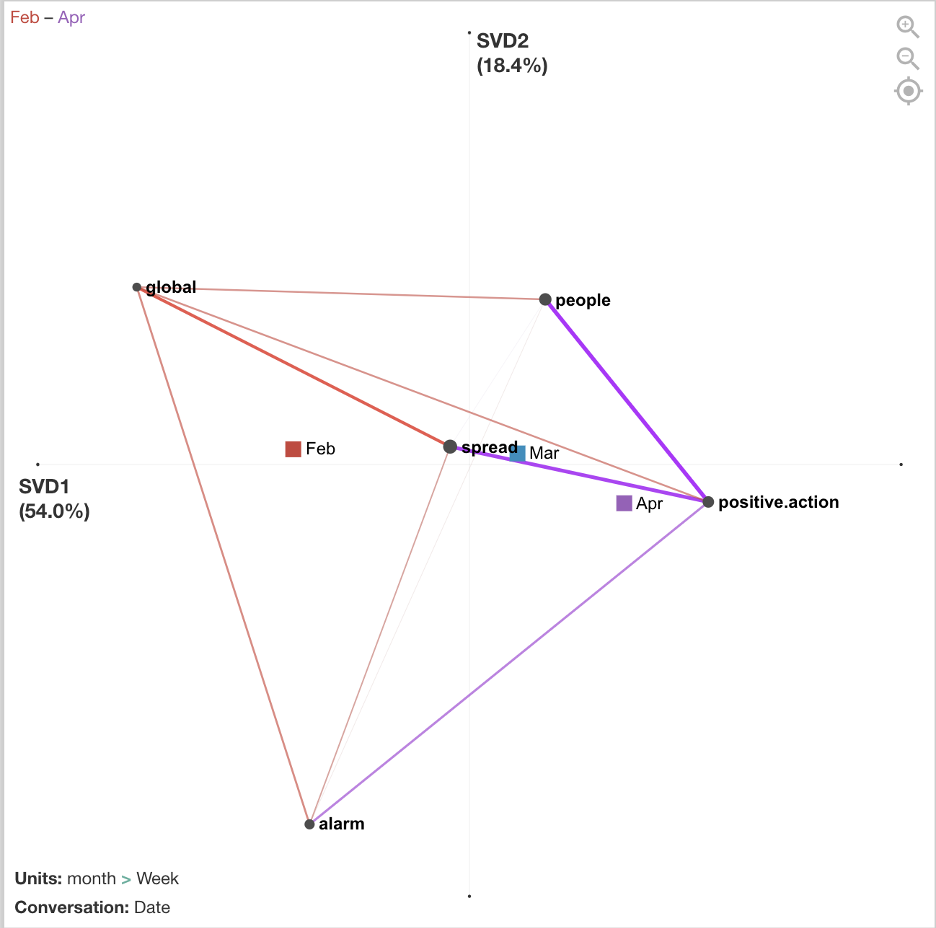

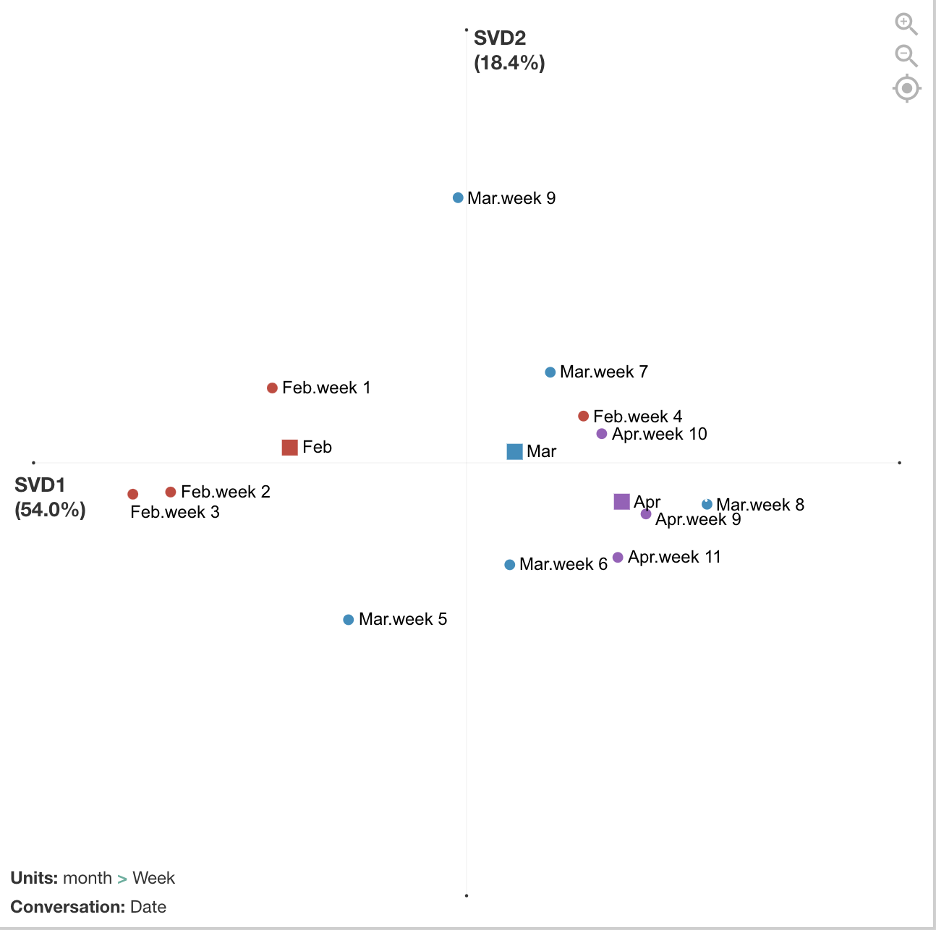

After the data was coded, I exported the file as a csv and imported it into the ENA webtool.Stanzas (segmentations for how co-occurrences are counted) were by date. Sometimes there was one tweet on a date and sometimes there were several tweets. Units (individual networks) were accumulated by week and then grouped into red, blue, and purple networks by month.

The figure below compares the average networks for Feb and Apr and shows the average centroids for all three months. The differences are not significant if we run statistics on the number of weeks (due to small sample size/low power), but the effect is relatively large (r = 1.0 on x-axis). Future analyses could examine statistics on a smaller grain size of networks, such as date or single tweets, to increase sample size.

There are differences between February, March, and April in terms of how the CDC tweets were conveying information to the public, and the mean centroids show a trajectory across the space. In February, tweets focused more on people involved in the global spread of COVID-19. In March, tweets focused more on what positive actions people could take to cope with and reduce spread of COVID-19. April continued to focus on positive actions to reduce spread but included more alarming tweets about the severity and deaths.

Below are examples of tweets from each month that match with the interpretation above.

| Example of Feb 21 (week 3) Tweet | There are now 2 categories of #COVID19 cases in the US: 1) Cases detected through the domestic public health systems, and 2) cases among people who were repatriated via @StateDept flights from Wuhan (China) & from the Diamond Princess cruise ship (Japan). https://t.co/1ifchVQ9jm. |

|---|---|

| Example of March 17 (week 7) Tweet | While #socialdistancing for #COVID19, take the time to check in on friends and family to see how they are doing. Set up a daily phone or video call to touch base and share the best and most challenging parts of the day. #TogetherApart https://t.co/KyBmehvGcY https://t.co/rN1RmYS9i0 |

| Example of April 14 (week 11) Tweet | Did you know that adults 65 and older and those with an underlying medical condition are at higher risk for getting seriously ill from #coronavirus? Learn how to slow the spread of coronavirus and protect your loved ones at https://t.co/iH2iReYrBq. #COVID19 https://t.co/1KnsDJSJY3 |

The figure below breaks out the networks by each week. February includes weeks 1 - 4, March includes weeks 5 - 9, April includes 9 - 11. This visualization shows more detailed trajectories on how the CDC tweets’ focus changed week by week. For example, in week 9 at the end of March, those tweets look more similar to the February tweets that had a global/travel focus. This was because many additional travel advisories went into effect during this week by the U.S. government.

Additional possible analyses include exploring metadata from this dataset such as retweets, favorited/popular tweets, or media included in tweets. Also, end of April tweets were not included in this analysis and are now available for download and could be added. In addition, this tweet data could be correlated with metadata from other sources such as general tweets with #Covid19 hashtag, containment and mitigation measures, number of infections, etc.